2021-ACL/IJCNLP Prefix-Tuning: Optimizing Continuous Prompts for Generation

Motivation

Fine-tuning updates all parameters of the pretrained LM and must store a full copy of the model for every downstream task, which is expensive in both computation and storage. To address this, Prefix-tuning optimizes only a small continuous task-specific vector (called the prefix) while keeping the pretrained LM parameters frozen.

Background

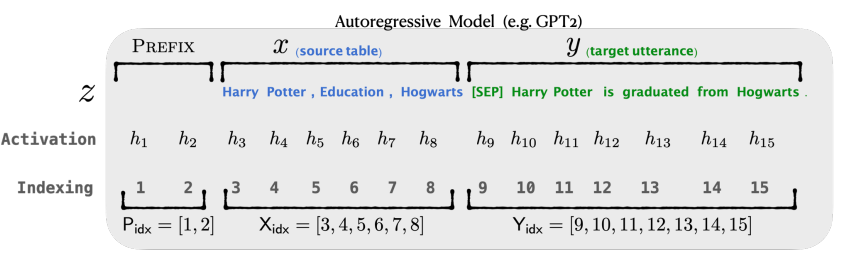

Generally, lightweight fine-tuning methods “insert” a small number of task-specific parameters into a frozen LM. Common forms of “inserting” include: (1) adapter-tuning, which inserts small task-specific layers between the layers of the pretrained LM; (2) prompt engineering, which designs a task-specific prompt for the input. From this viewpoint, a parameter-efficient fine-tuning (PEFT) strategy essentially learns a task-specific “bias” for each downstream task. The general problem setup is formalized below for an autoregressive LM and an encoder-decoder model, together with standard fine-tuning. For example, in the table-to-text task, $x$ corresponds to a linearized data table and $y$ is a textual description.

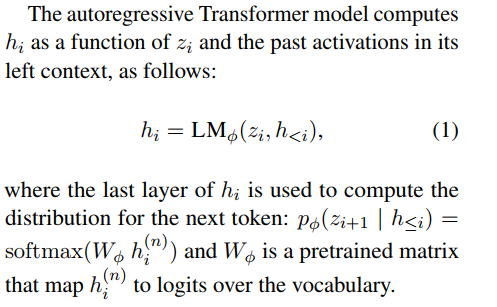

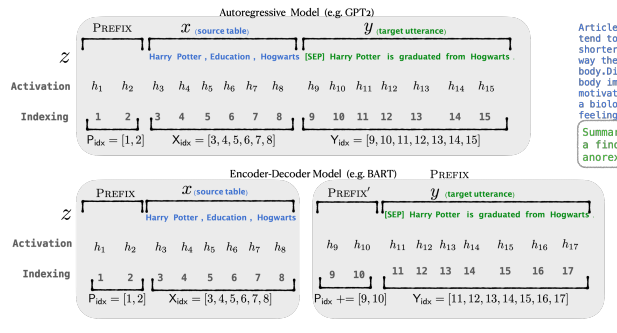

- Autoregressive LM

As shown in the figure above, let $z = [x; y]$ be the concatenation of $x$ and $y$; let $\mathsf{X}_{\mathrm{idx}}$ denote the sequence of indices that corresponds to $x$, and $\mathsf{Y}_{\mathrm{idx}}$ denote the same for $y$. The activation at time step $i$ is $h_i \in \mathbb{R}^d$, where $h_i = [h_i^{(1)}; \cdots; h_i^{(n)}]$ is the concatenation of all activation layers at this time step, and $h_i^{(j)}$ is the activation of the $j$-th Transformer layer at time step $i$.

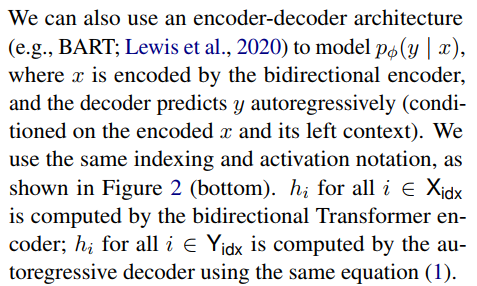
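The notation above can be made concrete with a minimal Python sketch. It assumes whitespace tokenization (real LMs use subword tokens) and a hypothetical `linearize_table` helper that flattens a record into `key : value | key : value` text; the separator format is an illustrative assumption. `next_token_probs` mirrors the paper's next-token distribution $p_\phi(z_{i+1} \mid h_{\le i}) = \mathrm{softmax}(W_\phi h_i^{(n)})$, which uses only the last layer's activation.

```python
import numpy as np

def linearize_table(record):
    """Hypothetical helper: flatten a record into 'key : value | key : value' text."""
    return " | ".join(f"{key} : {value}" for key, value in record.items())

def build_z(x_tokens, y_tokens):
    """Return z = [x; y] together with X_idx and Y_idx (positions of x and y in z)."""
    z = list(x_tokens) + list(y_tokens)
    x_idx = list(range(len(x_tokens)))
    y_idx = list(range(len(x_tokens), len(z)))
    return z, x_idx, y_idx

def concat_layers(per_layer):
    """h_i = [h_i^(1); ...; h_i^(n)]: concatenate the n per-layer activations of step i."""
    return np.concatenate(per_layer)

def next_token_probs(h_last, W_out):
    """p(z_{i+1} | h_{<=i}) = softmax(W h_i^(n)): only the last layer is used."""
    logits = W_out @ h_last
    exp = np.exp(logits - logits.max())  # shift for numerical stability
    return exp / exp.sum()

# Usage (whitespace tokenization is an assumption; real LMs use subword tokens).
x = linearize_table({"name": "Starbucks", "type": "coffee shop"}).split()
y = "Starbucks serves coffee".split()
z, X_idx, Y_idx = build_z(x, y)
# X_idx == [0, 1, 2, 3, 4, 5, 6, 7], Y_idx == [8, 9, 10]
```

Keeping $\mathsf{X}_{\mathrm{idx}}$ and $\mathsf{Y}_{\mathrm{idx}}$ as explicit position lists makes it easy to restrict a loss to the target positions only.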

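- Encoder-Decoder

An encoder-decoder model (e.g., BART) models $p_\phi(y \mid x)$: $x$ is encoded by a bidirectional encoder, and the decoder predicts $y$ autoregressively, conditioned on the encoded $x$ and its left context. The activations $h_i$ for $i \in \mathsf{X}_{\mathrm{idx}}$ are computed by the encoder, and those for $i \in \mathsf{Y}_{\mathrm{idx}}$ by the decoder.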

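- Fine-tuning

Full fine-tuning initializes $\phi$ with the pretrained parameters and updates all of them by gradient steps on the log-likelihood of the target:

$$\max_\phi \log p_\phi(y \mid x) = \sum_{i \in \mathsf{Y}_{\mathrm{idx}}} \log p_\phi(z_i \mid h_{<i})$$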
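Fine-tuning maximizes $\log p_\phi(y \mid x) = \sum_{i \in \mathsf{Y}_{\mathrm{idx}}} \log p_\phi(z_i \mid h_{<i})$, and this sum can be checked numerically with a small NumPy sketch. It assumes the LM's outputs are given as a `(len(z), vocab)` array of logits in which row $t$ scores the next token $z_{t+1}$; the function names are hypothetical. Only positions in $\mathsf{Y}_{\mathrm{idx}}$ enter the sum, so the table $x$ conditions the prediction but is never itself a training target.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def target_log_likelihood(logits, z, y_idx):
    """Return sum_{i in Y_idx} log p(z_i | h_{<i}).

    logits[t] holds the scores produced after reading z_0..z_t, i.e. the
    prediction for z_{t+1}; so z_i is scored by row i - 1. Assumes 0 is not
    in y_idx (x is non-empty), and positions of x contribute nothing.
    """
    logp = log_softmax(np.asarray(logits, dtype=float))
    return float(sum(logp[i - 1, z[i]] for i in y_idx))
```

Under prefix-tuning, the paper keeps this same objective and changes only which parameters are trainable: $\phi$ stays frozen and only the prefix parameters $\theta$ are updated.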

Methods

The Prefix-tuning pipeline is as follows:

Note:

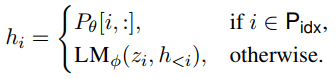

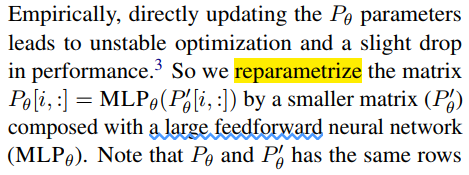

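(1) Prefix-tuning does not optimize $P_\theta$ directly, because doing so leads to unstable optimization and a slight drop in performance. Instead, it reparametrizes each row through an MLP, $P_\theta[i,:] = \mathrm{MLP}_\theta(P'_\theta[i,:])$, where the smaller matrix $P'_\theta$ has the same number of rows (the prefix length) but a different column dimension. After training, the reparametrization can be dropped and only $P_\theta$ needs to be saved.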

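(2) It prepends prefix activations at every Transformer layer, rather than only at the embedding layer. For encoder-decoder models such as BART, a prefix is added to both the encoder and the decoder, giving $z = [\mathrm{PREFIX}; x; \mathrm{PREFIX}'; y]$.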

(3) Random initialization leads to low performance with high variance, especially in low-data settings. Initializing the prefix with activations of real words significantly improves generation.
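The three notes can be tied together in a minimal NumPy sketch. Everything here is a toy under stated assumptions: `lm_step` is a hypothetical stand-in for a frozen Transformer in which layer $j$ reads the mean of earlier layer-$j$ activations (a crude proxy for attending to cached per-layer keys/values), and the sizes and the two-layer tanh MLP are illustrative choices, not the paper's settings. It follows the rule $h_i = P_\theta[i,:]$ for prefix positions $i \in \mathsf{P}_{\mathrm{idx}}$ and $h_i = \mathrm{LM}_\phi(z_i, h_{<i})$ otherwise, with $P_\theta[i,:] = \mathrm{MLP}_\theta(P'_\theta[i,:])$.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy sizes chosen only for illustration (hypothetical, not the paper's settings).
VOCAB, D, N_LAYERS, PREFIX_LEN, K, HIDDEN = 12, 8, 3, 4, 5, 16

# Frozen "pretrained" parameters phi: a toy stand-in for a Transformer LM.
EMB = rng.normal(size=(VOCAB, D))
W = [rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(N_LAYERS)]

def lm_step(token, past):
    """Toy LM_phi(z_i, h_{<i}): return h_i as a list of N_LAYERS activations.

    Layer j combines the layer-(j-1) output with the mean of the layer-j
    activations of all earlier positions (prefix positions included).
    """
    x, h_i = EMB[token], []
    for j in range(N_LAYERS):
        ctx = np.mean([p[j] for p in past], axis=0) if past else np.zeros(D)
        x = np.tanh(x @ W[j] + ctx)
        h_i.append(x)
    return h_i

# Trainable parameters theta: a small P'_theta plus an MLP that maps each row
# to a full prefix activation of size N_LAYERS * D (same rows, more columns).
P_small = rng.normal(size=(PREFIX_LEN, K))
W1, b1 = rng.normal(size=(K, HIDDEN)) * 0.5, np.zeros(HIDDEN)
W2, b2 = rng.normal(size=(HIDDEN, N_LAYERS * D)) * 0.1, np.zeros(N_LAYERS * D)

def prefix_matrix():
    """P_theta[i, :] = MLP_theta(P'_theta[i, :])."""
    return np.tanh(P_small @ W1 + b1) @ W2 + b2

def forward(tokens, P):
    """Activations for [PREFIX; tokens] with the LM itself left untouched."""
    # Each prefix row is split into one activation per layer, so every layer sees a prefix.
    past = [list(row.reshape(N_LAYERS, D)) for row in P]
    for tok in tokens:
        past.append(lm_step(tok, past))
    return past

def init_prefix_from_words(word_tokens):
    """Initialize P_theta with the frozen LM's activations on real word tokens."""
    past = []
    for tok in word_tokens:
        past.append(lm_step(tok, past))
    return np.stack([np.concatenate(h) for h in past])
```

In training, only $\theta$ (here `P_small`, `W1`, `b1`, `W2`, `b2`) would receive gradients while `EMB` and `W` stay fixed, and afterwards only `prefix_matrix()` would be stored. `init_prefix_from_words` illustrates note (3) on its own; how the paper combines real-word initialization with the MLP reparametrization is not shown here.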

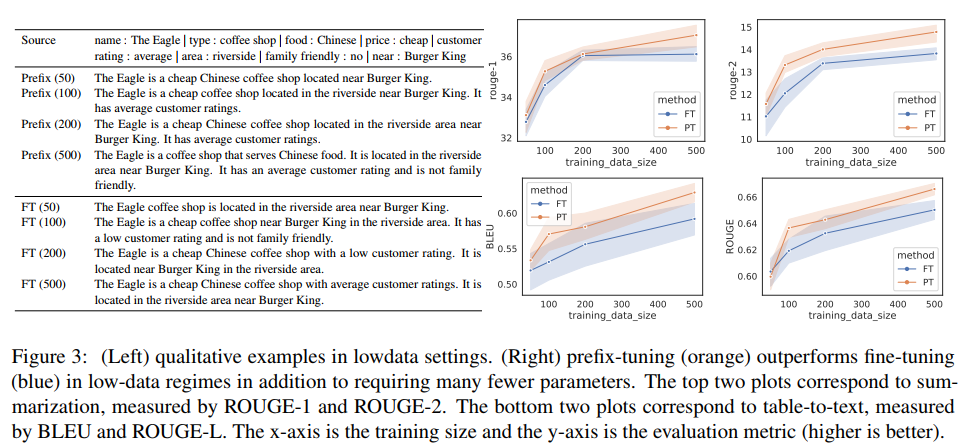

Results

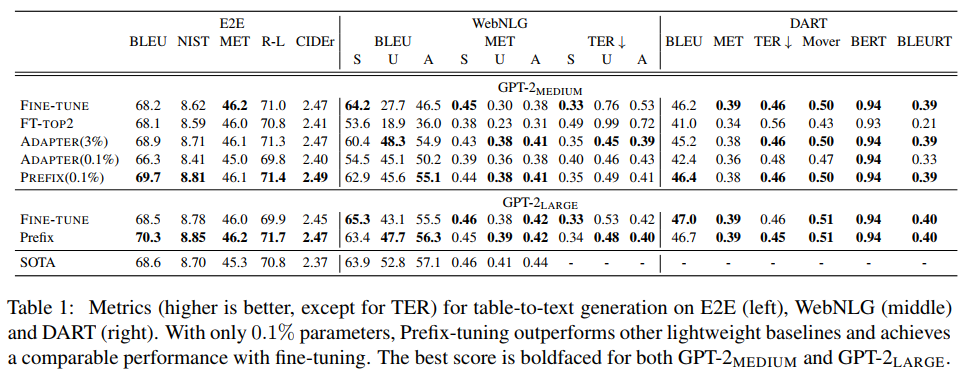

Table-to-Text task

Summarization task