2022-ICLR OMNI-SCALE CNNS: A SIMPLE AND EFFECTIVE KERNEL SIZE CONFIGURATION FOR TIME SERIES CLASSIFICATION

Motivation

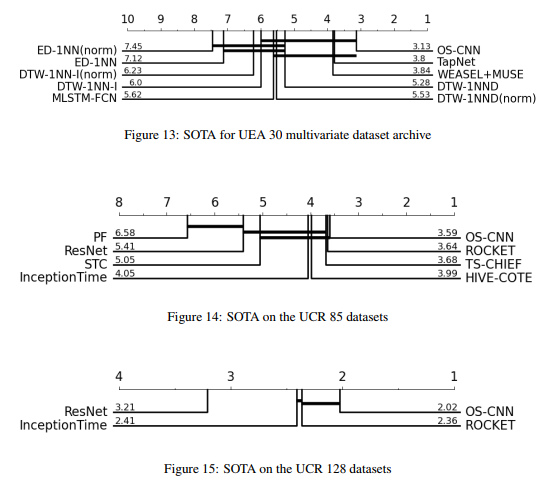

As the left panel of Fig. 1 shows, tuning the receptive field (RF) size has a substantial influence on accuracy, and the right panel shows that no single RF size is best across all datasets. The researchers therefore sought a method that finds the best RF size for each dataset. Along the way they observed two phenomena, listed below, which led to the 1D-OS block proposed in the paper.

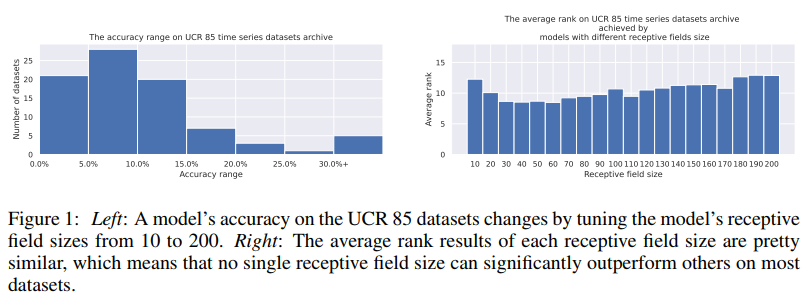

- Phenomenon 1: according to the left panel of Fig. 2, 1D-CNNs are not sensitive to the specific kernel size configuration.

- Phenomenon 2: according to the right panel of Fig. 2, the performance of a 1D-CNN is mainly determined by the best RF size it contains.

- Note: the figure also suggests that model performance is positively correlated with the model's RF size.
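To make "RF size" concrete: in a stack of 1D convolutions with stride 1 and no dilation, each layer with kernel size k widens the receptive field by k - 1 time steps, so quite different kernel configurations can end up with the same RF size. The sketch below assumes stride 1 and no dilation; the function name `receptive_field` and the three configurations are illustrative, not taken from the paper.

```python
from typing import Sequence


def receptive_field(kernel_sizes: Sequence[int]) -> int:
    """RF of stacked 1D convs, assuming stride 1 and no dilation.

    Each layer with kernel size k adds k - 1 time steps to the field.
    """
    return 1 + sum(k - 1 for k in kernel_sizes)


# Hypothetical kernel configurations that differ layer by layer
# but share one RF size.
configs = [(3, 3, 3), (7, 1, 1), (4, 3, 2)]
for cfg in configs:
    print(cfg, receptive_field(cfg))  # each line ends with 7
```

Read this way, Phenomenon 1 suggests that for a fixed RF size like 7, the choice among such configurations matters little.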

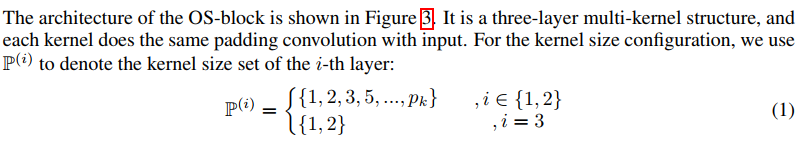

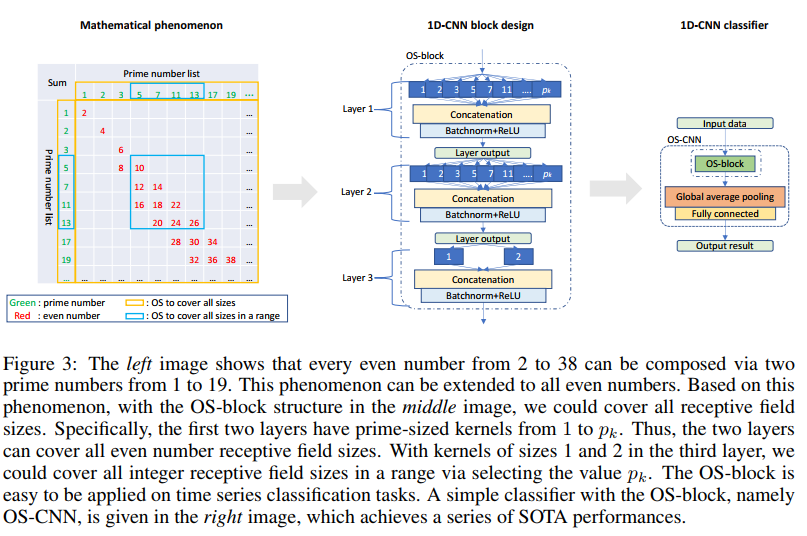

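Given these findings, the goal is a design that covers all RF sizes: such a model should perform about as well as one built with the best RF size, with no extra effort spent on choosing a specific kernel configuration. The OS block was proposed against this background, based on Goldbach's conjecture, which states that every even integer greater than 2 can be written as the sum of two prime numbers. Since two stacked stride-1 layers with kernel sizes p and q give an RF of p + q - 1, prime kernel sizes in two layers are enough to reach every odd RF size from 3 upward, within the range of primes used.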
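The link to Goldbach's conjecture can be checked numerically. Two stacked stride-1 layers with kernel sizes p and q have an RF of p + q - 1, so if every even number from 4 to N is a sum of two primes, every odd RF size from 3 to N - 1 is reachable with prime kernels alone. The sketch below uses plain Python with a hypothetical bound N = 100; the conjecture itself is unproven, but it has been verified by computer far beyond any practical kernel size.

```python
import math
from typing import List


def primes_up_to(n: int) -> List[int]:
    """Sieve of Eratosthenes: return all primes <= n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            for j in range(i * i, n + 1, i):
                is_prime[j] = False
    return [i for i, flag in enumerate(is_prime) if flag]


N = 100  # hypothetical upper bound on kernel size
primes = primes_up_to(N)

# RF of two stacked stride-1 layers with kernel sizes p and q is p + q - 1.
two_layer_rfs = {p + q - 1 for p in primes for q in primes}

# Odd RF sizes 3, 5, ..., 99 correspond to even sums 4, 6, ..., 100.
missing = [r for r in range(3, N, 2) if r not in two_layer_rfs]
print(missing)  # -> []
```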

Method

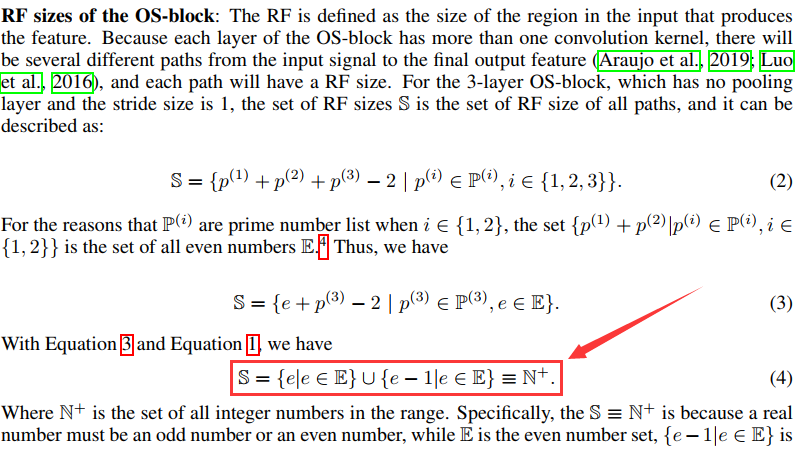

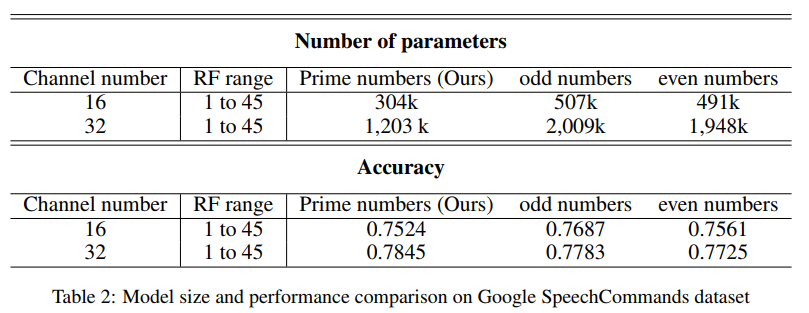

As the figure above shows, the set S covers all integer RF sizes within its range. Because primes become sparse as they grow (the number of primes up to N is roughly N / ln N), this design keeps the model small and scales well to long time series, as illustrated below:
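The coverage of S can be reproduced the same way. The exact layer layout appears only in the figure, so the sketch below assumes a three-layer block in which layers 1 and 2 use kernel sizes {1} plus every prime up to N, and layer 3 uses sizes {1, 2}. The function name `os_block_rf_sizes` and the bound N = 100 are hypothetical. Under that assumption the code checks that every RF size from 1 to N is reachable and counts how many kernel sizes each of the first two layers needs.

```python
import math
from typing import List, Set


def primes_up_to(n: int) -> List[int]:
    """Sieve of Eratosthenes: return all primes <= n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            for j in range(i * i, n + 1, i):
                is_prime[j] = False
    return [i for i, flag in enumerate(is_prime) if flag]


def os_block_rf_sizes(n: int) -> Set[int]:
    """RF sizes of a hypothetical OS-style block (assumed layout).

    Layers 1 and 2: kernel sizes {1} plus every prime <= n.
    Layer 3: kernel sizes {1, 2}. All convs: stride 1, no dilation.
    """
    first_two = [1] + primes_up_to(n)
    last = [1, 2]
    # Stride-1, undilated stack: RF = 1 + sum(k - 1) = k1 + k2 + k3 - 2.
    return {k1 + k2 + k3 - 2 for k1 in first_two for k2 in first_two for k3 in last}


N = 100  # hypothetical largest kernel size
S = os_block_rf_sizes(N)
kernels_per_layer = 1 + len(primes_up_to(N))

print(set(range(1, N + 1)) <= S)  # -> True
print(kernels_per_layer)  # -> 26
```

Here 26 kernel sizes per layer (1 plus the 25 primes up to 100) reach every RF size up to 100, whereas a single layer with a kernel of every size from 1 to 100 would need 100 sizes. Because the number of primes up to N grows only like N / ln N, the gap widens as N grows.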

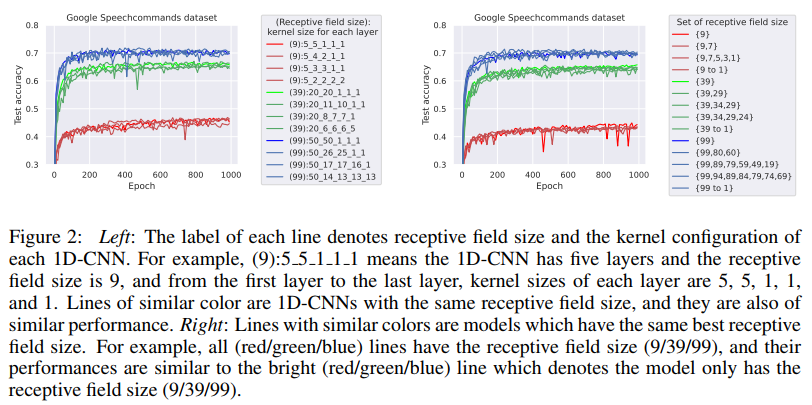

Results