2023-arxiv Enhancing Multivariate Time Series Classifiers through Self-Attention and Relative Positioning Infusion

Motivation

The paper proposes two novel attention mechanisms: a self-attention variant with a relative positional encoding, and an attention block.

The main contributions:

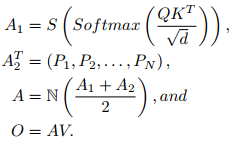

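(1) TPS (Temporal Pseudo-Gaussian augmented Self-attention): explicitly encodes the relative distance between positions as a Gaussian-like function of that distance.

(2) GTA (Global Temporal Attention): similar in spirit to the coordinate attention (CA) block from the computer vision domain.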

Method

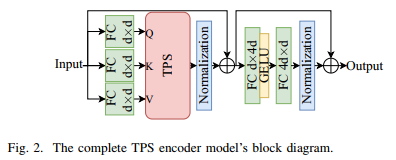

- Temporal Pseudo-Gaussian augmented Self-attention

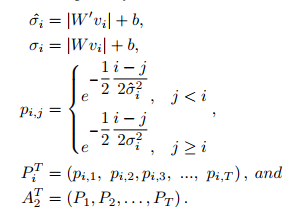
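To make the idea of a Gaussian relative-position term concrete, here is a minimal sketch in plain Python. It is an assumption-laden illustration, not the paper's TPS formulation (which is given in the figure above): it adds a generic bias `-(i - j)^2 / (2 * sigma^2)` to the scaled dot-product logits, which is equivalent to multiplying the attention weights by a Gaussian kernel over the distance `|i - j|` and renormalizing. The function names and the fixed `sigma` parameter are hypothetical.

```python
import math


def gaussian_relative_bias(length, sigma=2.0):
    """Return a length x length matrix with bias[i][j] = -(i - j)^2 / (2 * sigma^2).

    The bias is 0 on the diagonal and grows more negative with distance.
    (Illustrative choice; not the paper's pseudo-Gaussian definition.)
    """
    return [
        [-((i - j) ** 2) / (2.0 * sigma ** 2) for j in range(length)]
        for i in range(length)
    ]


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_with_bias(q, k, v, sigma=2.0):
    """Scaled dot-product attention with an additive Gaussian distance bias.

    q, k, v are lists of T vectors (each a list of floats).
    Returns (outputs, attention_weights).
    """
    t, d = len(q), len(q[0])
    bias = gaussian_relative_bias(t, sigma)
    outputs, weights = [], []
    for i in range(t):
        # Content score plus position-dependent bias for every key position j.
        logits = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) + bias[i][j]
            for j in range(t)
        ]
        w = softmax(logits)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append(
            [sum(w[j] * v[j][c] for j in range(t)) for c in range(len(v[0]))]
        )
    return outputs, weights
```

With all-zero queries and keys the content scores vanish, so each row of the returned weights is just a normalized Gaussian centered on the query position. A smaller `sigma` concentrates attention on nearby time steps, and a larger one approaches uniform attention.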

- Global Temporal Attention block

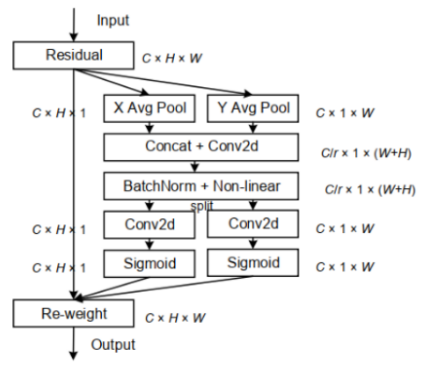

For reference, the structure of the coordinate attention (CA) block is as follows:

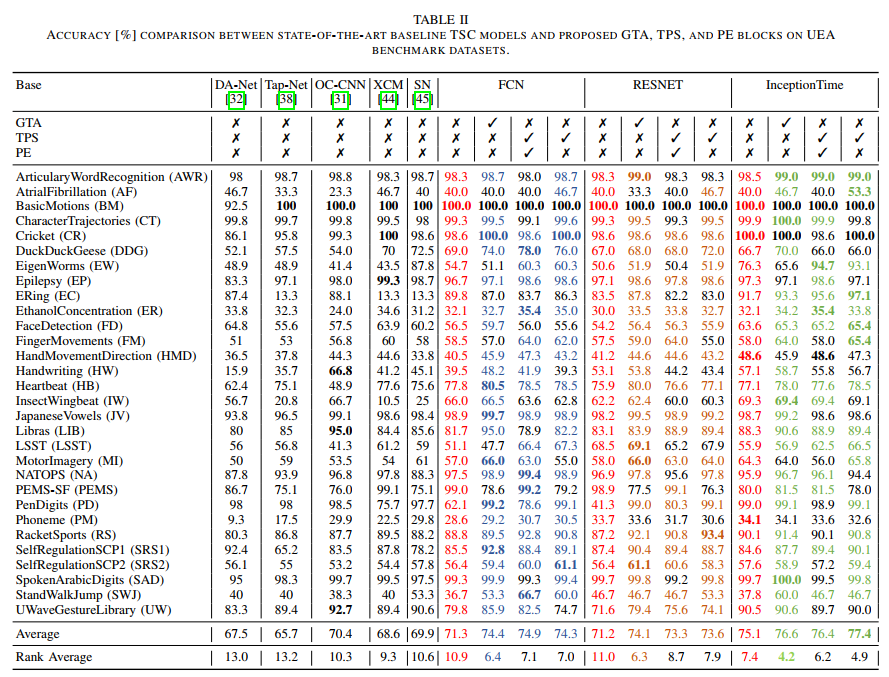
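The core idea behind the CA block (pool the feature map along each axis separately, then use the pooled descriptors as multiplicative gates) can be sketched on a channels × time feature map. This is a deliberately simplified illustration: the learned 1×1 convolutions and the intermediate nonlinearity of the real CA block are omitted, and it is not the GTA block from the paper. The function name `directional_gate` is hypothetical.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def directional_gate(x):
    """Gate a feature map with descriptors pooled along each axis.

    x is a list of C rows (channels), each a list of T floats (time steps).
    Simplification: a real CA block passes the pooled descriptors through
    learned convolutions before the sigmoid; here they are gated directly.
    """
    c, t = len(x), len(x[0])
    # Average over time: one descriptor per channel.
    chan_desc = [sum(row) / t for row in x]
    # Average over channels: one descriptor per time step.
    time_desc = [sum(x[i][j] for i in range(c)) / c for j in range(t)]
    chan_gate = [sigmoid(d) for d in chan_desc]
    time_gate = [sigmoid(d) for d in time_desc]
    # Each element is rescaled by its channel gate and its time-step gate.
    return [
        [x[i][j] * chan_gate[i] * time_gate[j] for j in range(t)]
        for i in range(c)
    ]
```

The output has the same shape as the input, so a block of this kind can be dropped between existing layers without changing the surrounding architecture.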

Results


https://firrice.github.io/posts/2024-06-26-9/