2021-ICLR LORA LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS

Motivation

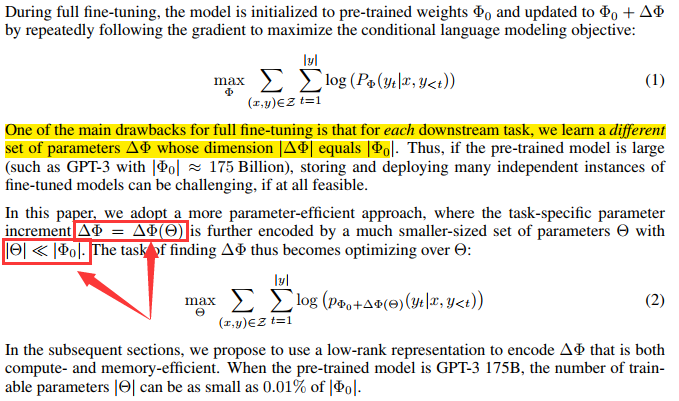

Fine-tuning all the parameters of a large model is expensive.

Method

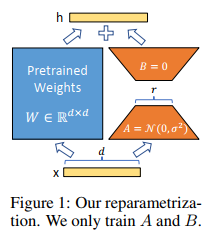

LoRA represents the fine-tuned weight update with two low-rank matrices, A and B. The detailed pipeline is as follows:
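To make the role of A and B concrete, here is a minimal sketch of a single LoRA-adapted linear layer. It assumes the formulation from the original paper: the pretrained weight W0 stays frozen, the update is the product B·A scaled by alpha / r, A starts as small random Gaussian values, and B starts at zero. The names `LoRALinear` and `matvec` are made up for this illustration, and plain Python lists are used only to keep the example dependency-free; it is not the paper's reference implementation.

```python
import random


def matvec(M, x):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, W0, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W0), len(W0[0])
        self.W0 = W0            # pretrained weight, never updated
        self.scale = alpha / r  # scaling factor applied to the update
        # A is r x d_in with a small random Gaussian init.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # B is d_out x r and starts at zero, so B @ A = 0 and the
        # adapted layer initially behaves exactly like the frozen one.
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W0, x)                   # W0 x
        update = matvec(self.B, matvec(self.A, x))  # B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]

    def merged_weight(self):
        """Fold the update into one matrix W0 + scale * B A for inference."""
        r = len(self.A)
        return [
            [w + self.scale * sum(self.B[i][k] * self.A[k][j] for k in range(r))
             for j, w in enumerate(row)]
            for i, row in enumerate(self.W0)
        ]

    def trainable_params(self):
        """Only A and B are trained: r * d_in + d_out * r values."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

During training only A and B would receive gradient updates, so the number of trainable values is r · (d_in + d_out) rather than d_in · d_out. After training, `merged_weight()` gives a single matrix with the same shape as W0, so the adapted layer can be served like an ordinary linear layer.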

Results

The LoRA paper reports extensive experiments; see the original paper for the details.

https://firrice.github.io/posts/2024-06-26-LoRA/